NVIDIA's Advanced H200 Accelerator and Jupiter Supercomputer Announced for 2024

NVIDIA, a leading vendor of server GPUs, is preparing to refresh its current-generation lineup with the H200 accelerator, which is equipped with HBM3E memory. The announcement, made at the SC23 tradeshow, continues NVIDIA's efforts to keep its product line current.

The H200 Accelerator: A Significant Upgrade with HBM3E Memory

Overview of the H200 Accelerator

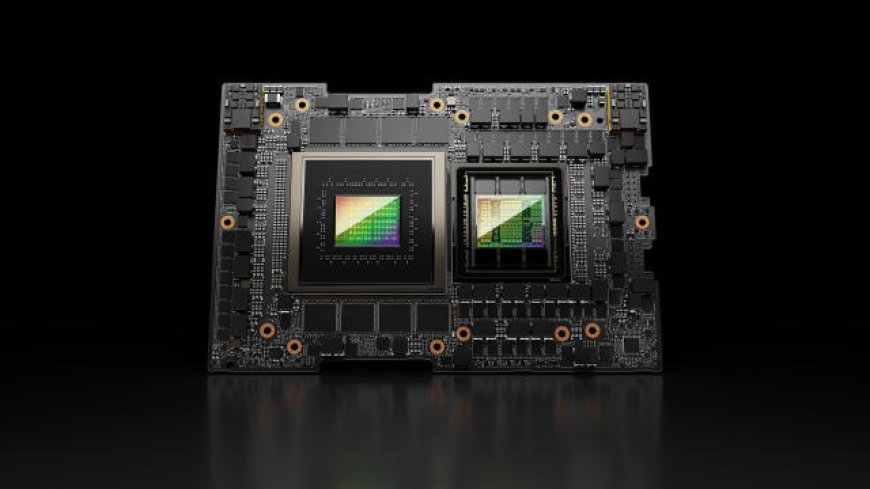

The H200 accelerator is NVIDIA's response to the evolving demands of the server GPU market, particularly in generative AI. It is designed as a mid-generation upgrade for the Hx00 product line, featuring faster and higher-capacity HBM3E memory. The upgrade should substantially improve the accelerators' performance in workloads bound by memory bandwidth, and the added capacity should let them handle larger workloads.

Improved Memory Capacity and Bandwidth

With HBM3E memory, the H200 will see its memory capacity increase from 80GB to 141GB, and memory bandwidth is expected to jump from 3.35TB/second to around 4.8TB/second. This represents a significant enhancement in terms of both capacity and speed, addressing the needs of large-scale AI models and applications.
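To put those figures in proportion, the relative uplift can be worked out directly from the numbers quoted above. The short Python sketch below is only arithmetic on the article's figures; the function name `uplift_percent` is illustrative and not taken from any NVIDIA material.

```python
def uplift_percent(old: float, new: float) -> float:
    """Return the percentage increase going from old to new."""
    return (new - old) / old * 100.0

# Figures quoted in the article (H100 -> H200).
capacity_gb = (80, 141)
bandwidth_tb_s = (3.35, 4.8)

capacity_gain = uplift_percent(*capacity_gb)
bandwidth_gain = uplift_percent(*bandwidth_tb_s)

print(f"Memory capacity:  +{capacity_gain:.0f}%")   # +76%
print(f"Memory bandwidth: +{bandwidth_gain:.0f}%")  # +43%
```

In other words, the H200 offers roughly three quarters more memory and a little over 40% more bandwidth than the H100, based on the quoted figures.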

Technical Specifications

The H200's memory will operate at approximately 6.5Gbps/pin, a notable increase from the H100's 5.3Gbps/pin. Although this falls short of the speeds HBM3E is rated for, retrofitting the faster memory onto an existing GPU design still delivers a substantial improvement in memory performance.
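As a rough sanity check, the per-pin rates can be converted into aggregate bandwidth. The sketch below rests on assumptions that are not stated in the article: a 1024-bit interface per HBM stack (the usual HBM stack width) and five active stacks on the H100. The six stacks for the H200 come from the article itself, which mentions them further on. Treat it as a back-of-the-envelope estimate, not an official calculation.

```python
BITS_PER_STACK = 1024  # assumption: standard HBM interface width per stack

def aggregate_bandwidth_tb_s(gbps_per_pin: float, stacks: int) -> float:
    """Peak bandwidth in TB/s from per-pin data rate and stack count."""
    total_gbit_s = gbps_per_pin * BITS_PER_STACK * stacks
    return total_gbit_s / 8 / 1000  # Gbit/s -> GB/s -> TB/s

h100 = aggregate_bandwidth_tb_s(5.3, stacks=5)  # assumption: five active stacks
h200 = aggregate_bandwidth_tb_s(6.5, stacks=6)  # six stacks, per the article

print(f"H100: ~{h100:.2f} TB/s")  # ~3.39 TB/s
print(f"H200: ~{h200:.2f} TB/s")  # ~4.99 TB/s
```

Both estimates land slightly above the quoted 3.35TB/second and 4.8TB/second, which is what one would expect if the per-pin rates are rounded approximations.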

NVIDIA's Approach to High-End Server Accelerators

Challenges and Expectations

Shipping a part with all six memory stacks working, as planned for the H200, requires a near-perfect chip. As a result, the H200 may be a lower-volume, lower-yielding part than the H100. NVIDIA's decision to expose only 141GB of the HBM3E memory's 144GB physical capacity also appears to be a deliberate trade-off between yield and performance.
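The capacity figures above imply a per-stack size and a small held-back margin. The following Python sketch simply derives both from the article's numbers; the variable names are illustrative, and the even split across stacks is an assumption.

```python
STACKS = 6          # six HBM3E stacks, per the article
PHYSICAL_GB = 144   # physical capacity quoted in the article
USABLE_GB = 141     # capacity NVIDIA exposes

per_stack_gb = PHYSICAL_GB / STACKS            # assumes capacity is split evenly
held_back_gb = PHYSICAL_GB - USABLE_GB
held_back_pct = held_back_gb / PHYSICAL_GB * 100

print(f"Per stack: {per_stack_gb:.0f}GB")                      # 24GB
print(f"Held back: {held_back_gb}GB ({held_back_pct:.1f}%)")   # 3GB (2.1%)
```

So only about 2% of the physical memory is left unused.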

Computational Throughput and Real-World Performance

NVIDIA has not disclosed any significant improvement in raw computational throughput for the H200 over its predecessor, but real-world performance is expected to benefit greatly from the enhanced memory capabilities. The computational performance of an HGX H200 cluster is anticipated to match that of current HGX H100 clusters.

The HGX H200 Platform: Ensuring Compatibility with Existing Systems

Integration with Current H100 Systems

NVIDIA also announced the HGX H200 platform, an upgrade of the 8-way HGX H100. The new platform uses the H200 accelerator while remaining compatible with existing H100 systems. The HGX carrier boards, which form the foundation of NVIDIA's H100/H200 family, allow these high-performance GPUs to be integrated into customizable server systems.

Conclusion: NVIDIA's Forward-Thinking Strategy for Server GPUs

NVIDIA's announcement of the H200 accelerator and the Jupiter supercomputer at SC23 highlights the company's commitment to staying at the forefront of server GPU technology. By incorporating HBM3E memory into its accelerators and ensuring backward compatibility with existing systems, NVIDIA is positioned to meet the growing demands of the server market, particularly in areas like generative AI. The H200 is expected to be released in the second quarter of 2024.